Two Important Reasons Not to Migrate to the Cloud

Today, it seems like everyone has an opinion on why moving data and workloads to public cloud services is a good idea. What’s not often discussed is why, in some cases, the public cloud isn’t a good fit.

Don’t get me wrong. The public cloud provides numerous benefits, and it makes sense for many organizations, but not all. For companies with applications that require optimal performance, the variability and limitations of the public cloud can be disruptive.

Here are two of the biggest reasons IT leaders are questioning whether the public cloud is right for their mission-critical applications.

Reason #1: Performance is a crapshoot. You never know what you’re going to get.

Whether your data and processes run on dedicated infrastructure or are distributed across virtual machines, the underlying physical servers limit the resulting performance. What’s running on them? Who else is taxing their resources? How are they being accessed?

Public cloud computing relies on a layer of management and orchestration software to operate the platform and distribute workloads. That layer consumes server resources of its own, eating up capacity. It also introduces variability in performance, because processes may be redistributed across multiple data centers over time.

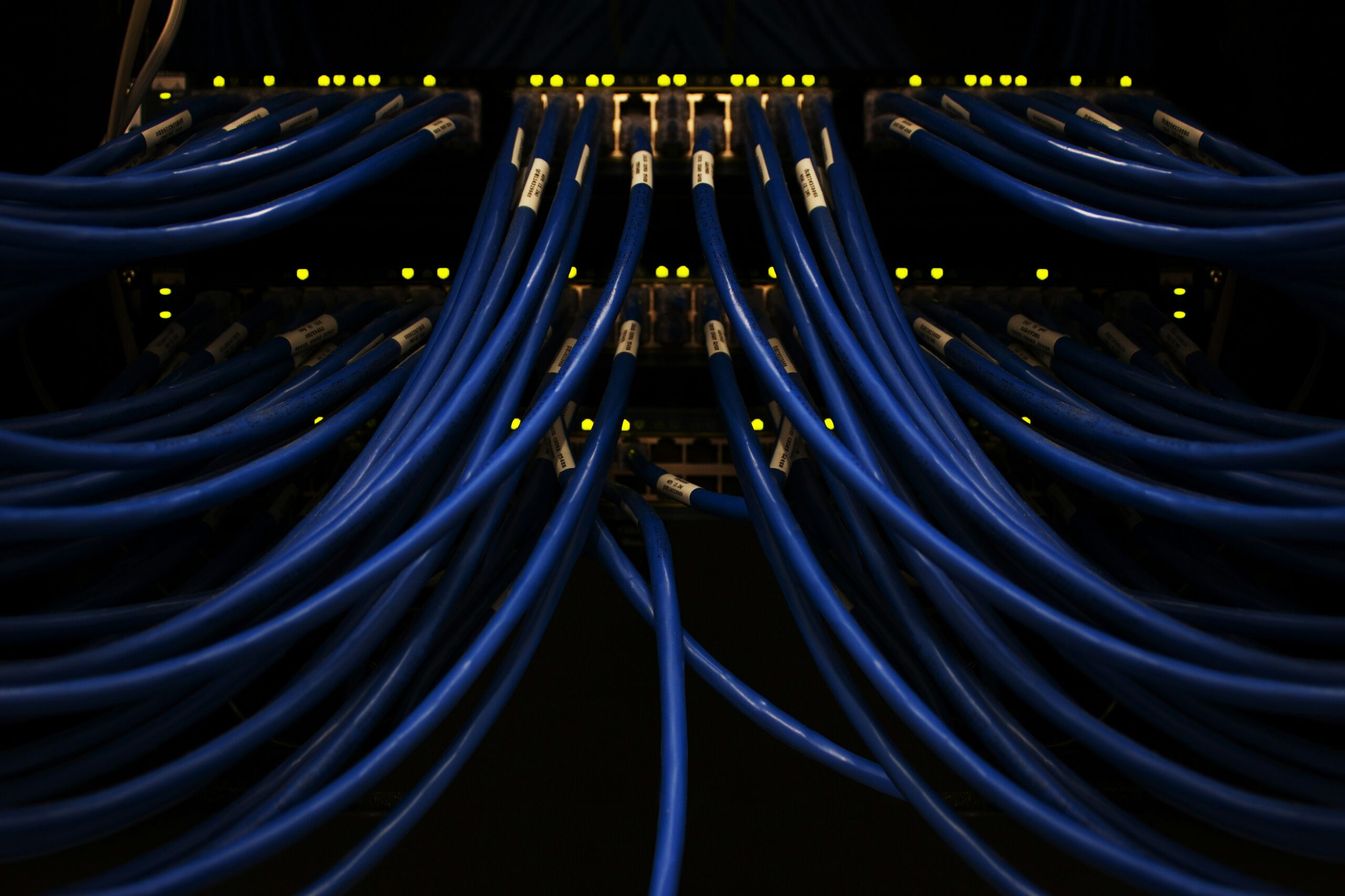

Additionally, performance-sensitive applications struggle in multi-tenant environments, where “noisy neighbors” can pop up unexpectedly and monopolize bandwidth, disk I/O, CPU and other resources.

Finally, while you can purchase cloud-connect links to reach the public cloud and avoid the best-effort internet, connectivity within the distributed hyperscale infrastructure is a big “black box,” which creates the potential for lag, performance impairments and, in extreme situations, outages.

Reason #2: The public cloud provider holds the cards, so it makes all the decisions.

Enterprises have little say after workloads are migrated to the cloud. Providers own, manage and monitor the entire cloud infrastructure.

Organizations don’t know where their data is operating, let alone who else shares their server. And when there’s an issue, the cloud provider decides how to fix it and when.

There’s also little room for customization. Most public cloud providers offer fixed options and terms that may not align with what the organization really needs.

That is why many of today’s CIOs and CTOs are turning to bare metal servers for predictable, real-time performance and extensive customization options.

A bare metal server is dedicated entirely to a single business. The organization never shares storage, connections or bandwidth with other tenants, which maximizes performance and eliminates the “noisy neighbor” effect.

Bare metal servers also allow for higher resource utilization. Enterprises don’t have to worry about multi-tenancy overhead or a host operating system and virtualization layer hogging disk, memory and processing power.

Enterprises can configure bare metal servers to meet the exact needs of the business. And since the owner has root access to the server, it’s easy to upgrade, add resources and scale on demand.

Put your workloads where they make the most sense.

Organizations that need to improve agility and want to keep their IT teams focused on business-generating work may want to migrate workloads to the cloud. However, bare metal is a straightforward and convenient option for organizations that need predictable, constant access to resources.