Edge Computing 101: Latency, Location and Why Data Centers Still Matter in the Age of the Edge

New acronyms, buzzwords and concepts seem to enter the tech lexicon daily. The “edge” (or “edge computing”) is a fairly recent addition, but what’s most noteworthy is its dizzying diversity of definitions. The definition of edge computing can vary wildly depending on who’s talking about it and in what context.

What is Edge Computing?

One of the easiest-to-understand explanations of edge computing can be found in a Data Center Frontier article: “The goal of Edge Computing is to allow an organization to process data and services as close to the end-user as possible.” To take it a step further, you could even say the purpose of edge computing is to provide a better, lower-latency customer experience. (The customer, in this case, is simply whoever needs or requests the data, regardless of what they may be trying to accomplish.)

While much of the discussion around edge computing focuses solely on the edge itself, a holistic conversation requires us to step back and consider everything that leads up to it, namely the data center and network infrastructure that support the edge. When your applications and end users require low latency, examining your whole infrastructure deployment will be more effective than trying to “fix” performance issues with edge computing alone.

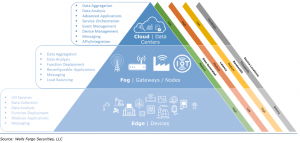

The IT Infrastructure Layers That Get Us to the Edge of Edge Computing

It’s not all about the edge. Here are the layers of IT infrastructure that make or break application performance and end user experience.

Data Centers/Cloud

This layer is made up of the physical buildings and distributed computing resources that act as the foundation of edge computing deployments. You can’t have edge computing without data centers and cloud computing, just as you can’t have a functional kitchen and appliances without plumbing, electricity and four walls to keep Mother Nature out. What’s important at this layer is proximity and scale, i.e., data centers that are close to end users and have the capacity to meet fast-growing data processing and storage requirements.

Gateways/Nodes (aka “Fog”)

Fog, a term coined by Cisco, refers to a decentralized computing infrastructure in which data, compute, storage and applications are distributed in the most logical, efficient place between the edge computing data source and the centralized data center/cloud. The National Institute of Standards and Technology (NIST) adopted a similar definition only in March 2018, which illustrates just how nascent aspects of this model still are. The primary difference to consider when thinking about “fog” versus “edge” is that “fog” is considered “near-edge,” whereas “edge” is considered “on the device.”

Edge

Of all the layers discussed, this one might have the fuzziest definition. For example, from a recent Wells Fargo report: “A user’s computer or the processor inside of an IoT camera can be considered the network edge, but a user’s router, a local edge CDN server, and an ISP are also considered the edge.” Nonetheless, it is safe to think about edge computing in terms of exactly where the intelligence, computing power and communication functions are situated relative to the end user.

Here’s an example from manufacturing: Many machines now have intelligence built in to control and make decisions at the device. This provides the ability to leverage machine learning that will help plant operators identify “normal” and “abnormal” behaviors. It also adds another layer to control logic and makes it possible for smart machines to use data in decision-making. As a result, analytics for large unstructured data sets—like video and audio—can and will increasingly occur at the edge or other places along the network. This will allow manufacturers to detect anomalies for further examination back at the factory command center.
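To make the “normal” versus “abnormal” idea concrete, here is a minimal Python sketch of how a device might flag unusual readings locally and forward only those upstream. It is an illustration under stated assumptions, not a description of any particular vendor’s method: the function name `find_anomalies`, the sample temperature values and the three-standard-deviation threshold are all hypothetical, and a real deployment would likely use a trained model rather than a simple statistical cutoff.

```python
from statistics import mean, stdev


def find_anomalies(readings, baseline, threshold=3.0):
    """Return the readings that sit more than `threshold` standard
    deviations away from the mean of the baseline ("normal") samples."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline gives no scale to compare against.
        return []
    return [r for r in readings if abs(r - mu) / sigma > threshold]


# Hypothetical sensor values, invented for illustration only.
baseline = [70.1, 69.8, 70.3, 70.0, 69.9, 70.2]  # "normal" behavior
readings = [70.0, 70.4, 85.2, 69.7]              # new samples on the device

# Only the flagged values would need to travel to the command center.
flagged = find_anomalies(readings, baseline)
print(flagged)
```

The design point is that the comparison happens on the device, so the bulk of the raw data never has to cross the network.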

Latency, Edge Computing and the Edge-to-Fog-to-Cloud Continuum

Now that we’ve got definitions out of the way, let’s talk about the factor that underpins the edge: latency. As we discussed earlier, and as the Data Center Frontier article puts it, “The goal of Edge Computing is to allow an organization to process data and services as close to the end-user as possible.” That’s why achieving the lowest possible latency for compute and data processing is central to the value proposition of the edge.

“The Fog” Extends the Cloud Closer to Edge IoT Devices

As you can see in the chart above, latency decreases as you get closer to the edge. That seems pretty simple, right? But there’s a nuance to this that needs to be considered: These layers are all connected and dependent on one another. In other words, you can’t have low-latency edge devices if you do not have the right connectivity further up the chain to the data center and cloud.
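One way to picture that dependency is as a latency budget in which every hop a request crosses adds to the total. The Python sketch below is only an illustration: the hop names and millisecond values are hypothetical placeholders rather than measurements or figures from the chart, and `round_trip_ms` is a made-up helper.

```python
# Hypothetical one-way latencies in milliseconds for each hop in the chain.
# These are placeholder values, not measurements.
HOPS_MS = {
    "device_to_gateway": 2.0,
    "gateway_to_data_center": 8.0,
    "data_center_to_cloud": 25.0,
}


def round_trip_ms(hops, path):
    """Sum the one-way latency of every hop on the path, then double it
    to account for the response traveling back the same way."""
    return 2 * sum(hops[name] for name in path)


# A request the gateway can answer on its own crosses only the first hop.
local = round_trip_ms(HOPS_MS, ["device_to_gateway"])

# A request that needs the cloud pays for every hop along the way.
full = round_trip_ms(HOPS_MS, list(HOPS_MS))

print(f"answered near the edge: {local} ms")
print(f"answered in the cloud:  {full} ms")
```

With these placeholder numbers, a slow upstream hop dominates the total, which is why a fast edge device cannot compensate for poor connectivity further up the chain.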

As a data center operator and cloud service provider, we’re regularly in conversations with customers and partners about the locations of our 56 global data centers and what kind of latency they can expect to their destinations. High-performance applications require the path from the data center/cloud to the gateway to the edge to have minimal friction (e.g., latency). Thus, if you’re deploying an edge device in my hometown of Chicago, for example, you don’t want the device talking to a data center in Los Angeles. Ideally, you want that data center to be in Chicago, too. This is true for most workloads and applications today, regardless of IoT or edge capabilities, and it all comes back to providing better, lower-latency customer experiences.
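A back-of-the-envelope calculation shows why the Chicago versus Los Angeles distinction matters. The Python sketch below estimates the great-circle distance between the two cities and the best-case round-trip time imposed by physics alone. It rests on several assumptions: approximate city-center coordinates, a mean Earth radius of 6,371 km, and light traveling through fiber at roughly 200 km per millisecond (about two-thirds of its speed in a vacuum). The function names are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0   # mean Earth radius
FIBER_KM_PER_MS = 200.0    # assumption: light in fiber covers ~200 km per ms

# Approximate city-center coordinates (latitude, longitude) in degrees.
CHICAGO = (41.8781, -87.6298)
LOS_ANGELES = (34.0522, -118.2437)


def great_circle_km(a, b):
    """Haversine distance in kilometers between two (lat, lon) points."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def min_round_trip_ms(distance_km):
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * distance_km / FIBER_KM_PER_MS


distance = great_circle_km(CHICAGO, LOS_ANGELES)
print(f"distance: {distance:.0f} km")
print(f"best-case round trip: {min_round_trip_ms(distance):.1f} ms")
```

Under these assumptions the distance comes out to roughly 2,800 km and the round trip to a floor of about 28 ms, before any routing, queuing or processing delay. Real fiber paths are rarely straight lines, so observed latency will be higher, whereas a data center in the same metro area keeps the propagation component close to zero.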

The Edge Is Only as Good as the Rest of Your Network

Whether you’re deploying an IoT project, thinking about how edge computing will impact your business, or simply looking to create a better experience for your end users, remember that proximity matters because latency matters. While pushing edge infrastructure ever closer to end users is one way to reduce network latency, it’s also important to remember that edge computing is only as good as its upstream infrastructure.

For your critical, latency-dependent applications, close edges aren’t enough. You need an entire network built for reliable connectivity and low latency. INAP has a robust global network engineered for scalability, built with the performance our customers need in mind. On top of that, we have an automated route optimization engine baked into all of our products and services that ensures the best-performing path for your outbound traffic.

Edge computing presents an exciting opportunity to explore just how close the technology can get us to the end user’s device, but high-performance applications need high-performance infrastructure from end to end. If your customers need low latency, the edge can’t make up for a poor network—you have to start with a solid foundation of data centers and cloud infrastructure.